How to Write a Robots.txt File in Yoast SEO

Search engine crawlers are automated programs that scan the web, following links from one page to another so that search engines can index the content they find. One of the ways site owners can communicate with these crawlers is by using a robots.txt file. This text file, placed in the root directory of a website, tells crawlers which pages or sections of a site they should not crawl.

In this article, we’ll walk through how to create a robots.txt file with Yoast SEO, a popular WordPress plugin. We’ll also cover why the file matters, the default directives set by Yoast SEO, and best practices for editing and using it.

What is a Robots.txt File?

A robots.txt file is a plain text file placed in the root directory of a website (for example, https://yourdomain.com/robots.txt). It is used to communicate with web crawlers, also known as “bots” or “spiders,” telling them which pages or sections of a site they should not crawl. The file is not mandatory, but having one in place is good practice.

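This file can be used for various reasons, such as keeping crawlers out of areas of your site that don’t need to be crawled or stopping them from crawling duplicate or non-public pages. Keep in mind that robots.txt is publicly readable and is only a request that well-behaved crawlers choose to follow, so it is not a way to protect sensitive files. It can also be used to tell crawlers where your XML sitemap (sitemap_index.xml) is located.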
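To see how a crawler interprets these rules, here is a minimal sketch using Python’s standard-library `urllib.robotparser` module. The file contents and the yourdomain.com URLs are placeholders, and the parser is given the text directly instead of downloading a live file.

```python
from urllib.robotparser import RobotFileParser

# A placeholder robots.txt: one rule group for all crawlers plus a sitemap location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://yourdomain.com/sitemap_index.xml
"""

parser = RobotFileParser()
# parse() takes the file's lines directly, so no network request is made.
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch(user_agent, url) answers: may this crawler request this URL?
admin_allowed = parser.can_fetch("*", "https://yourdomain.com/wp-admin/options.php")
blog_allowed = parser.can_fetch("*", "https://yourdomain.com/blog/hello-world/")
print(admin_allowed, blog_allowed)  # False True

# site_maps() (Python 3.8+) returns the Sitemap URLs listed in the file.
print(parser.site_maps())  # ['https://yourdomain.com/sitemap_index.xml']
```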

Why Is a Robots.txt File Important?

A robots.txt file is an important tool that gives site owners control over how search engines interact with their websites. Without it, crawlers are free to crawl every page they can find on your site, including pages you may not want them to visit.

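For example, you may have a development or staging site, or pages under construction, that you don’t want crawled. Using the robots.txt file, you can ask crawlers to stay away from these pages. Blocking a URL does not guarantee it stays out of search results, though: if other pages link to it, a search engine may still list the URL without its content. For pages that must not appear in search, use a “noindex” tag or password protection.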

A robots.txt file can also be used to manage the crawl rate, the speed at which search engines crawl your site. By setting a “Crawl-delay” directive, you can ask crawlers to slow down, which can help to conserve server resources and prevent your site from being overwhelmed. Support varies, however: Bing honors this directive, but Google ignores it.

The Yoast SEO Default Directives

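When you create a robots.txt file through Yoast SEO, the plugin fills it with a set of default directives. The exact defaults can differ between Yoast SEO versions, so always review the file the plugin generates on your own site. The default directives described here include: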

- User-agent: * (applies to all crawlers)

- Disallow: /wp-admin/

- Disallow: /wp-includes/

- Disallow: /wp-content/plugins/

- Disallow: /wp-content/cache/

- Disallow: /wp-content/themes/

- Disallow: /trackback/

- Disallow: /feed/

- Disallow: /comments/

- Disallow: /category/

- Disallow: */trackback/

- Disallow: */feed/

- Disallow: */comments/

- Disallow: /?

- Disallow: /*?

- Sitemap: https://yourdomain.com/sitemap_index.xml

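These directives block crawling of the wp-admin and wp-includes folders and of the plugins, cache, and themes folders inside wp-content, as well as trackback, comment, and feed URLs. They also disallow URLs containing query strings and category archives, and the Sitemap line points crawlers to your XML sitemap. Review the folder rules carefully: Google fetches the CSS and JavaScript files in folders such as /wp-includes/ and /wp-content/themes/ to render your pages, so blocking them can affect how your pages are understood.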
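The list above relies on the `*` wildcard, which Python’s built-in `urllib.robotparser` does not understand (it only does prefix matching). To check which URLs those rules would block, the sketch below uses a small, hypothetical matcher (`rule_to_regex` and `is_allowed` are illustrative names, not part of any library) that follows the matching rules of RFC 9309 as Google documents them: `*` matches any run of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins, and Allow wins a tie. It ignores percent-encoding and user-agent groups, so treat it as an approximation rather than Google’s implementation.

```python
import re


# Hypothetical, simplified matcher in the spirit of RFC 9309 (one rule group only).
def rule_to_regex(path: str):
    """Turn a robots.txt path pattern into a regex matched from the start of the URL path."""
    anchored = path.endswith("$")
    if anchored:
        path = path[:-1]
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'.
    pattern = re.escape(path).replace(r"\*", ".*")
    return re.compile(pattern + ("$" if anchored else ""))


def is_allowed(rules: list, url_path: str) -> bool:
    """rules is a list of (directive, path) pairs, e.g. ("disallow", "/wp-admin/")."""
    best_length, allowed = -1, True  # no matching rule means the URL is allowed
    for directive, path in rules:
        if not path:  # an empty "Disallow:" blocks nothing
            continue
        if rule_to_regex(path).match(url_path):
            is_allow = directive == "allow"
            # The longest matching pattern wins; on a tie, Allow beats Disallow.
            if len(path) > best_length or (len(path) == best_length and is_allow):
                best_length, allowed = len(path), is_allow
    return allowed


# The Disallow rules from the list above (all under "User-agent: *").
RULES = [("disallow", p) for p in (
    "/wp-admin/", "/wp-includes/", "/wp-content/plugins/", "/wp-content/cache/",
    "/wp-content/themes/", "/trackback/", "/feed/", "/comments/", "/category/",
    "*/trackback/", "*/feed/", "*/comments/", "/?", "/*?",
)]

for url_path in ("/", "/blog/hello-world/", "/blog/hello-world/feed/",
                 "/category/news/", "/shop/?color=red",
                 "/wp-includes/js/jquery/jquery.min.js",
                 "/wp-content/uploads/logo.png"):
    verdict = "allowed" if is_allowed(RULES, url_path) else "blocked"
    print(f"{url_path:40} {verdict}")
```

In this sketch, a script under /wp-includes/ comes out blocked, while images in /wp-content/uploads/ stay crawlable because no rule covers that folder.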

How to Create a Robots.txt File in Yoast SEO

Creating a robots.txt file in Yoast SEO is a simple process. Follow these steps:

- Log in to your WordPress website

- Click on ‘Yoast SEO’ in the admin menu

- Click on ‘Tools’

- Click on ‘File Editor’

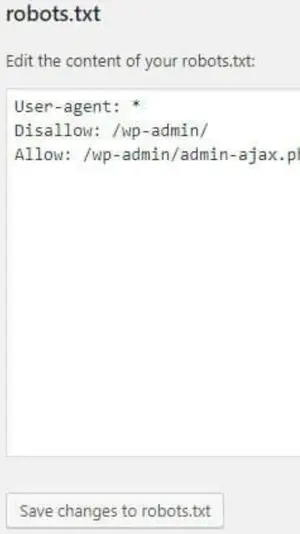

- Click the ‘Create robots.txt file’ button

- View (or edit) the file generated by Yoast SEO

- Click ‘Save changes to robots.txt’

It’s important to note that while Yoast SEO generates a robots.txt file with default directives, you can edit the file to suit your specific needs. This can include adding directives for specific user agents, such as “User-agent: Bingbot”, or blocking access to specific pages with the “Disallow” directive.
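As a sketch of what such an edit might look like, the snippet below adds a group for Bingbot to a minimal placeholder file and checks it with Python’s `urllib.robotparser`. The /private-page/ path is hypothetical. Because a crawler uses only the group that names it, Bingbot ignores the `*` group here, which is why /wp-admin/ is repeated in the Bingbot group.

```python
from urllib.robotparser import RobotFileParser

# Placeholder file: the default group plus a stricter group for Bingbot.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

User-agent: Bingbot
Disallow: /wp-admin/
Disallow: /private-page/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://yourdomain.com/private-page/"
for agent in ("Bingbot", "Googlebot"):
    # Each crawler uses the group that names it, falling back to "*".
    print(agent, parser.can_fetch(agent, url))
# Bingbot False
# Googlebot True
```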

Robots.txt Best Practices

When editing your robots.txt file, it’s important to follow best practices to ensure that it is effective and does not cause any issues for your website.

- Block duplicate and non-public pages. Use the robots.txt file to keep crawlers away from pages that are duplicates or not meant for public consumption. If you run a development or staging site on its own subdomain, remember that each host needs its own robots.txt file.

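- Use the correct syntax. Put each directive on its own line in the form “Field: value”, place “Disallow” and “Allow” lines after the “User-agent” line they apply to, and start each path with a slash. Paths are case-sensitive, so “/Blog/” and “/blog/” are treated as different paths.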

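- Use “noindex” for pages that shouldn’t be indexed. If you don’t want a page to appear in search results, add a “noindex” robots meta tag to it rather than blocking it in robots.txt, and leave the page crawlable so search engines can see the tag. Google does not support a “noindex” rule inside the robots.txt file itself.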

- Utilize the “Crawl-delay” directive where it is supported. If your site is experiencing high traffic or server strain, the “Crawl-delay” directive can ask crawlers to slow down. Bing honors it, but Googlebot ignores it.

- Use the “Allow” directive. If you want to allow access to a specific page or folder that is blocked by a more general disallow directive, you can use the “Allow” directive to do so.

- Keep it up-to-date. Regularly review and update your robots.txt file to ensure that it is still relevant and effective.
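Syntax mistakes in robots.txt tend to fail silently, because crawlers skip lines they don’t understand. The sketch below is a hypothetical `lint_robots_txt` helper, not an official validator, that flags a few common problems: unknown field names, rules that appear before any User-agent line, paths that don’t start with “/” or “*”, and Sitemap values that aren’t full URLs. The sample file is made up to trigger each check.

```python
from __future__ import annotations

# Fields this sketch recognizes; real crawlers may support others.
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list[tuple[int, str]]:
    """Return (line number, message) pairs for common robots.txt mistakes."""
    problems = []
    seen_user_agent = False
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((lineno, "missing ':' between field and value"))
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append((lineno, f"unrecognized field '{field}'"))
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow", "crawl-delay") and not seen_user_agent:
            problems.append((lineno, f"'{field}' appears before any User-agent line"))
        if field in ("allow", "disallow") and value and not value.startswith(("/", "*")):
            problems.append((lineno, "path should start with '/' or '*'"))
        if field == "sitemap" and not value.startswith(("http://", "https://")):
            problems.append((lineno, "Sitemap should be a full URL"))
    return problems


SAMPLE = """\
Disallow: /tmp/
User-agent: *
Disalow: /wp-admin/
Disallow: wp-content/cache/
Sitemap: /sitemap_index.xml
"""

for lineno, message in lint_robots_txt(SAMPLE):
    print(f"line {lineno}: {message}")
```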

Types of Robots.txt Directives

The User-Agent Directive

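The “User-agent” directive specifies which search engine crawler the rules that follow it apply to. The value “*” is a wildcard that applies to all crawlers. You can also name a particular crawler, such as “User-agent: Googlebot”, to apply a group of rules only to Google’s crawler. A crawler follows only the most specific group that matches it, so rules in the “*” group do not also apply to a crawler that has its own group.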

The Disallow Directive

The “Disallow” directive blocks access to specific pages or folders on your site. For example, “Disallow: /wp-admin/” would block access to the wp-admin folder.
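Disallow rules match by prefix: a rule blocks every URL path that begins with the given value, and matching is case-sensitive. This minimal sketch with Python’s `urllib.robotparser` (the paths are placeholders) shows why the trailing slash matters.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /wp-admin",  # no trailing slash: blocks every path starting with /wp-admin
    "Disallow: /drafts/",   # trailing slash: blocks only paths inside /drafts/
])

for path in ("/wp-admin/", "/wp-admin-guide/", "/WP-ADMIN/",
             "/drafts/post-1/", "/drafts", "/blog/"):
    allowed = parser.can_fetch("*", "https://yourdomain.com" + path)
    print(f"{path:18} {'allowed' if allowed else 'blocked'}")
```

Here /wp-admin-guide/ is blocked because it begins with /wp-admin, /drafts stays crawlable because it does not begin with /drafts/, and /WP-ADMIN/ is allowed because paths are case-sensitive.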

The Allow Directive

The “Allow” directive overrides a more general “Disallow” directive, permitting access to a specific page or folder inside a blocked path. It is used together with a “Disallow” directive. When rules conflict, Google applies the most specific (longest) matching rule.
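A common example is WordPress’s own virtual robots.txt, which blocks /wp-admin/ but allows /wp-admin/admin-ajax.php. The sketch below checks that pair with Python’s `urllib.robotparser`. This parser applies the first rule that matches, so the Allow line has to come first here; Google instead picks the most specific (longest) match, so rule order does not matter to Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Allow is listed first because urllib.robotparser stops at the FIRST matching rule.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

ajax_ok = parser.can_fetch("*", "https://yourdomain.com/wp-admin/admin-ajax.php")
settings_ok = parser.can_fetch("*", "https://yourdomain.com/wp-admin/options.php")
print(ajax_ok, settings_ok)  # True False
```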

The Noindex Directive

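The “noindex” directive is a value placed in a page’s robots meta tag (<meta name="robots" content="noindex">) or in an X-Robots-Tag HTTP header. It tells search engines not to show the page in search results. This is different from the “Disallow” directive in robots.txt, which stops crawlers from fetching the page at all; a crawler can only see a noindex tag on a page it is allowed to crawl.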
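Because a noindex instruction lives in the page itself or its HTTP headers, a tool has to fetch the page to see it. The sketch below is a hypothetical helper, `is_noindexed`, built on Python’s standard `html.parser`, that reports whether a page opts out of indexing through a robots meta tag or an X-Robots-Tag header. It assumes the headers arrive as a plain dictionary with the exact key “X-Robots-Tag”, and it ignores crawler-specific variants such as a meta tag named “googlebot”.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html: str, headers: dict) -> bool:
    """Hypothetical helper: True if the page opts out of indexing."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    header_directives = {
        d.strip().lower() for d in headers.get("X-Robots-Tag", "").split(",")
    }
    return "noindex" in finder.directives or "noindex" in header_directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindexed(page, {}))                                      # True
print(is_noindexed("<html></html>", {"X-Robots-Tag": "noindex"}))  # True
print(is_noindexed("<html></html>", {}))                           # False
```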

The Crawl-Delay Directive

The “Crawl-delay” directive specifies the number of seconds a crawler should wait between requests to your site. This can help manage server resources and keep your site from being overwhelmed by high traffic or frequent crawling. Not all search engines honor it (Bing does, but Googlebot ignores it), so use it alongside other methods of managing crawl rates.
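Here is a minimal sketch, using Python’s `urllib.robotparser` and a placeholder file, of how a crawler might read a Crawl-delay value. Honoring the value is up to the crawler, for example by pausing that many seconds between requests.

```python
from urllib.robotparser import RobotFileParser

# Placeholder file: a crawl delay for Bingbot only.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.crawl_delay("Bingbot"))    # 10
print(parser.crawl_delay("Googlebot"))  # None (the * group sets no Crawl-delay)
```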

Robots.txt: Final Thoughts

A robots.txt file is a valuable tool that gives site owners control over how search engines crawl their websites. The Yoast SEO plugin makes it easy to create and edit this file and provides default directives that keep crawlers out of administrative and other low-value sections of your site.

When editing your robots.txt file, follow best practices and use the correct syntax. Use a “noindex” meta tag for pages that should not be indexed, and consider the “Crawl-delay” directive to manage the crawl rate for search engines that support it.


Ready to Grow Your Search Engine Results?

Let Digital Results assist you with your SEO strategy and help deliver the search engine results you need.